Big data technologies help analyze diverse data sets that are beyond the capabilities of traditional databases. They support better and faster decision-making and improve operational efficiency. The market is booming with big data technologies that aim to enhance business intelligence and process information of exceptionally high volume, velocity, and variety. Read on to explore the different types of big data technologies, their key features, and the most popular ones in 2023.

What is Big Data Technology?

Big data technology is a broad term that encompasses the tools used for data analytics, data processing, and data extraction. These tools can handle highly complex data structures and help discover useful patterns and business insights efficiently.

When combined with other intelligent technologies such as the Internet of Things (IoT), machine learning (ML), and artificial intelligence (AI), they also enable real-time data handling. Together, these tools serve several objectives.

Integration

Organizations need to integrate data from various sources and incorporate it smoothly into their existing structures. Big data technologies provide the mechanisms for integrating data from multiple sources, making it readily available to users.

Processing

Raw data does not add value unless processed to retrieve usable information. It should be cleaned, organized, and prepared to eliminate redundancy and errors. Big data technologies can create high-quality data for better interpretation and application.
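The following minimal sketch illustrates the cleaning step. It normalizes, validates, and de-duplicates a handful of hypothetical customer records. The field names (`email`, `name`) and the rule of keeping the first record per email address are assumptions made for the example. Big data tools apply the same ideas across distributed data sets rather than a Python list.

```python
from typing import Iterable


def clean_records(rows: Iterable[dict]) -> list[dict]:
    """Normalize, validate, and de-duplicate raw customer records (hypothetical schema)."""
    seen: set[str] = set()
    cleaned: list[dict] = []
    for row in rows:
        # Normalize: trim whitespace and lowercase the email used as the record key.
        email = (row.get("email") or "").strip().lower()
        name = (row.get("name") or "").strip()
        # Drop records whose key is missing or obviously malformed.
        if "@" not in email:
            continue
        # Eliminate redundancy: keep only the first record seen for each email.
        if email in seen:
            continue
        seen.add(email)
        cleaned.append({"email": email, "name": name.title()})
    return cleaned


raw = [
    {"email": " Ana@Example.com ", "name": "ana lima"},
    {"email": "ana@example.com", "name": "Ana Lima"},   # duplicate after normalization
    {"email": "not-an-email", "name": "Bob"},           # invalid key
    {"email": "kai@example.com", "name": " kai park "},
]
print(clean_records(raw))
```

In this example, normalization happens before the duplicate check. That ordering is what lets the first two records be recognized as the same customer.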

Management and Storage

Big data technologies also provide efficient data management and storage, so that data is available when it is needed and easily accessible for future use.

Solutions

Big data technologies help solve business problems and perform multiple operations, including predictive analytics, visualization, statistical computing, workflow automation, cluster and container management, and more.

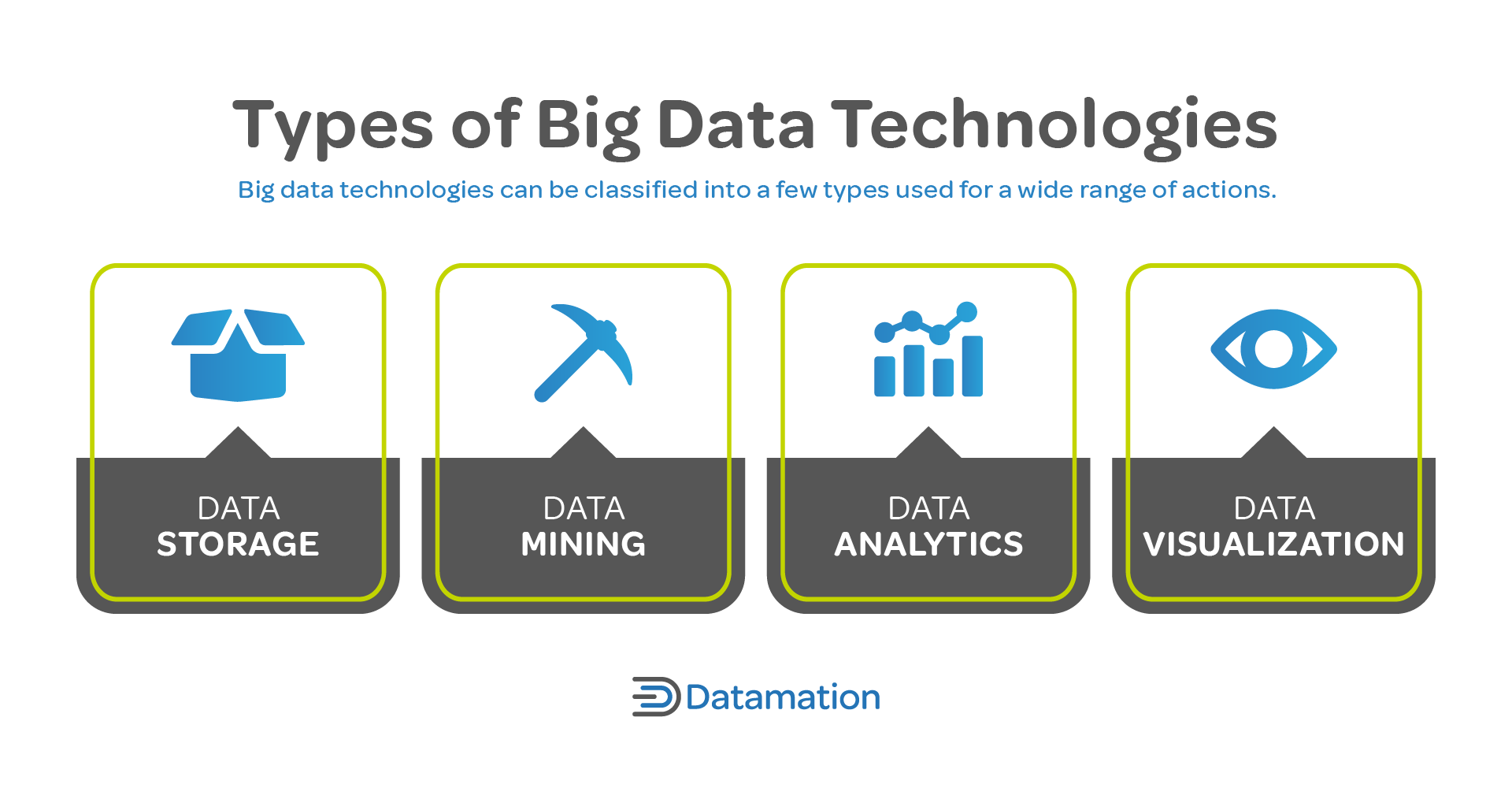

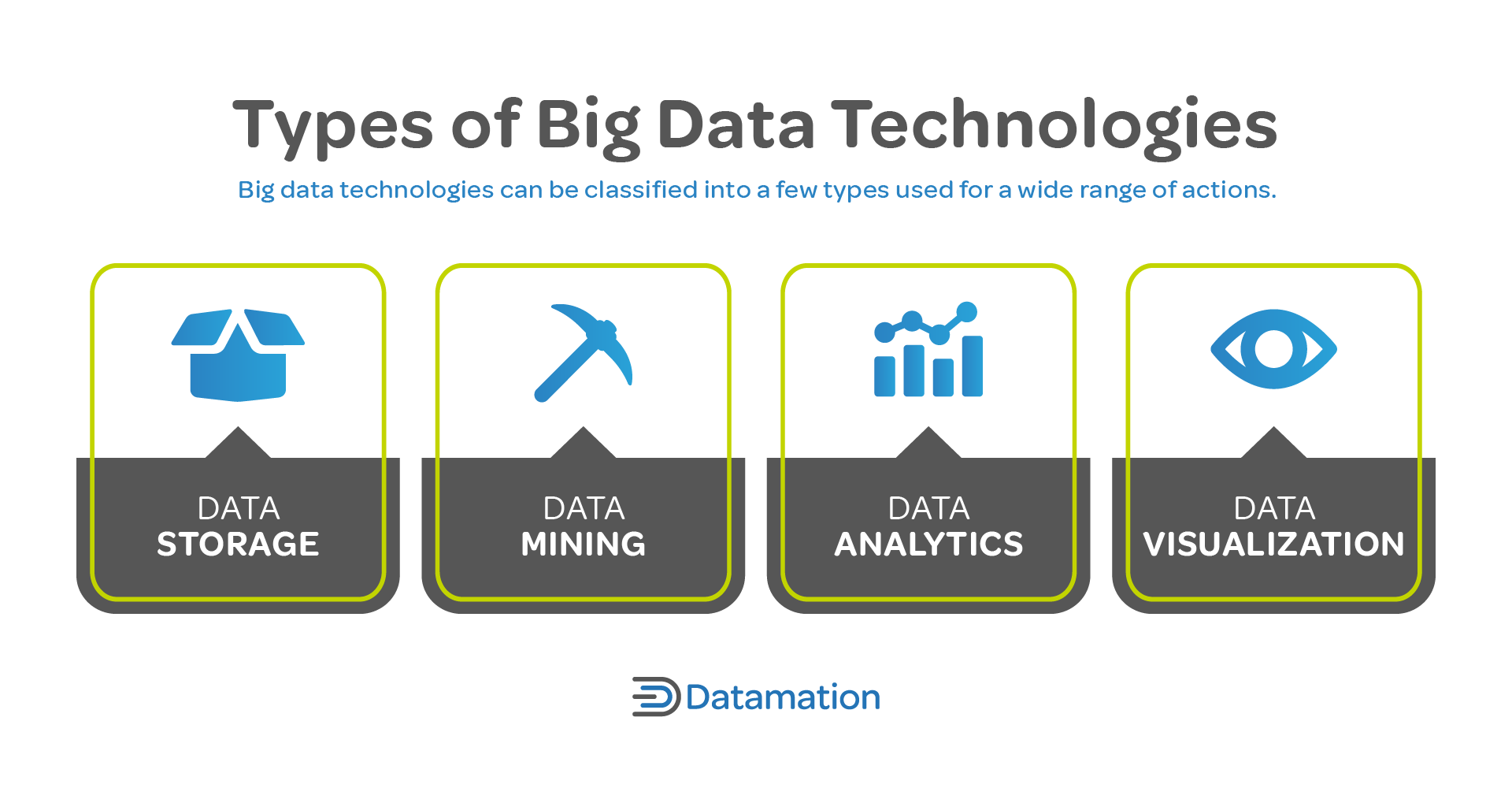

Types of Big Data Technologies

Big data technologies can be broadly classified into a few different types that together can be used for a wide range of actions across the entire data lifecycle.

Data Storage

Data storage is an important aspect of data operations. Some big data technologies are primarily responsible for collecting, storing, and managing vast volumes of information for convenient access.

Data Mining

Data mining uncovers valuable insights by revealing hidden patterns and trends. Data mining tools use different statistical methods and algorithms to extract usable information from unprocessed data sets. Top big data technologies for data mining operations include Presto, RapidMiner, Elasticsearch, MapReduce, Flink, and Apache Storm.

Data Analytics

Big data technologies equipped with advanced analytical capabilities help provide information to fuel critical business decisions and can use artificial intelligence to generate business insights.

Data Visualization

Big data technologies deal with extensive volumes of data that may be structured, semi-structured, or unstructured and that vary in complexity. As a result, it is essential to simplify this information and make it usable. Visual data formats like graphs, charts, and dashboards are more engaging and easier to comprehend.


Top Big Data Technologies

The field of big data technology is constantly evolving to meet the needs of businesses. It focuses on integrating crucial aspects of operations and processing information in real time, regardless of the complexity of the data. Here are the most widely used enterprise big data technologies in 2023.

Hadoop

Hadoop is an open source, Java-based framework from the Apache Software Foundation for managing big data. It provides distributed storage and processing for large and complex data sets, handles hardware failures, and processes data in batches using simple programming models. Hadoop is largely preferred for its scalability: it is designed to scale from a single server to thousands of machines while delivering a highly available service.

Key Features

- The Hadoop Distributed File System (HDFS) is fundamentally resilient. It stores data across distributed clusters and replicates blocks on multiple nodes for faster processing.

- The MapReduce programming model optimizes performance and load balancing. It splits large computations across multiple nodes, processes them in parallel, and runs tasks in batches.

- A built-in fault tolerance mechanism makes it highly resilient and reliable.
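The classic word-count example shows the MapReduce model most clearly. The sketch below runs the map, shuffle, and reduce phases sequentially in plain Python on a single machine. It illustrates only the programming model. It does not use Hadoop's actual Java API, where the framework would run each map and reduce task in parallel across cluster nodes.

```python
from collections import defaultdict
from itertools import chain


def map_phase(split: str) -> list[tuple[str, int]]:
    # Emit a (word, 1) pair for every word in one input split.
    return [(word.lower(), 1) for word in split.split()]


def shuffle_phase(pairs) -> dict[str, list[int]]:
    # Group intermediate values by key, as the framework does between map and reduce.
    grouped: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    # Combine all values that share a key into a single result.
    return key, sum(values)


def word_count(splits: list[str]) -> dict[str, int]:
    # On a cluster, each split would be mapped on a different node in parallel.
    mapped = chain.from_iterable(map_phase(s) for s in splits)
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(k, v) for k, v in grouped.items())


splits = ["big data needs big clusters", "Data moves to code"]
print(word_count(splits))
```

Because map and reduce are pure functions of their inputs, the framework can rerun a failed task on another node. This is one way the model supports the fault tolerance described above.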

Spark

The Apache Spark open source analytics engine is a top choice for large-scale analytics, with 80 percent of Fortune 500 companies using it for scalable computing and high-performance data processing. Its advanced distributed SQL engine supports adaptive query execution and runs faster than most data warehouses. It also has a thriving open source community that connects users and contributors around the world.

Key Features

- Integrates easily with tools like Tableau, Power BI, Superset, MongoDB, Elasticsearch, and SQL Server.

- Convenient development interfaces support batch processing and real-time data streaming using Java, Python, SQL, R, or Scala.

- Supports exploratory data analysis (EDA) and a wide range of workloads on single-node machines or clusters.

MongoDB

Recognized as one of the leading big data technologies, MongoDB is a NoSQL document database that helps create more meaningful and responsive customer experiences using AI/ML models. It combines data tiering and federation for optimized storage. It also offers native vector search capabilities for building intelligent applications with large language models (LLMs). MongoDB time series collections are cost-effective and optimized for analytical and IoT applications.

Key Features

- Integrates seamlessly with more than 100 technologies for efficient data operations, including AWS, Google Cloud, Azure, Vercel, and Prisma.

- Expressive, developer-native query API brings enhanced performance and efficient data retrieval.

- Unified data services simplify AI operations and application-driven intelligence.

R Language

R is a free software environment that offers highly extensible statistical and graphical techniques, along with effective data handling and storage. Its integrated suite of software facilities helps with in-depth analysis and data visualization. It covers a wide range of statistical techniques, including clustering, classification, time series analysis, and linear and nonlinear modeling, which makes it a top choice for data computing and manipulation. The R language is a thoroughly planned and coherent system that runs on UNIX, Windows, macOS, Linux, and FreeBSD platforms.

Key Features

- Produces well-designed, publication-quality plots and visually appealing graphics.

- Is similar to the S statistics language and can be regarded as a different implementation of S.

- Offers a simple and effective programming language. For computationally intensive tasks, C, C++, and Fortran code can be linked and called at run time.

Blockchain

With the popularity of cryptocurrencies, blockchain emerged as a top big data technology because its decentralized database mechanism prevents recorded data from being altered. It is a distributed ledger that enables immediate and completely transparent information sharing, ensuring a highly secure ecosystem. Blockchain is used extensively for fraud-free transactions in the banking, financial services, and insurance sectors. Other industries are also adopting it for data accuracy, traceability, prediction, and real-time analysis.

Key Features

- Immutable records cannot be tampered with or deleted, even by the system administrator.

- Eliminates time-consuming record reconciliations within a members-only network while maintaining a high level of confidentiality.

- Automatically executes smart contracts with embedded business terms, reducing complex, cross-enterprise business efforts.
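A ledger resists tampering because each block records the hash of the block before it. The following minimal Python sketch shows that idea. It deliberately omits consensus, digital signatures, and distribution across nodes, all of which real blockchains need. The block fields (`index`, `data`, `prev_hash`) are hypothetical choices for the example.

```python
import hashlib
import json


def block_hash(block: dict) -> str:
    # Hash a canonical JSON encoding so identical content always yields the same digest.
    payload = json.dumps(block, sort_keys=True).encode()
    return hashlib.sha256(payload).hexdigest()


def append_block(chain: list[dict], data: str) -> None:
    # Each new block stores the hash of its predecessor, linking the chain together.
    prev = block_hash(chain[-1]) if chain else "0" * 64
    chain.append({"index": len(chain), "data": data, "prev_hash": prev})


def is_valid(chain: list[dict]) -> bool:
    # Editing any earlier block changes its hash and breaks the next block's link.
    for i in range(1, len(chain)):
        if chain[i]["prev_hash"] != block_hash(chain[i - 1]):
            return False
    return True


ledger: list[dict] = []
for tx in ["A pays B 10", "B pays C 4", "C pays A 1"]:
    append_block(ledger, tx)

print(is_valid(ledger))           # True
ledger[1]["data"] = "B pays C 400"
print(is_valid(ledger))           # False: block 2 no longer matches block 1
```

In a real network, many participants hold copies of the chain. A tampered copy would therefore also disagree with the others, which this single-process sketch cannot show.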

Presto

This open source SQL query engine is a top big data technology for data mining. It can efficiently combine relational and nonrelational data sources and run interactive, ad hoc queries for fast analytics. Some of the largest internet-scale companies, including Meta, Uber, and X, use Presto for interactive queries against their internal data stores. It offers a single ANSI SQL interface that ties the entire data ecosystem together and scales to very large workloads.

Key Features

- In-memory distributed SQL engine can run reliably at a massive scale.

- As a neutrally governed open source project, it is suitable for both on-premises and cloud deployments.

- Its connector architecture lets users query data where it is stored.
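Presto's connector architecture lets one engine read from many stores without first copying the data. The Python sketch below mimics the idea with two hypothetical connectors, one over an in-memory SQLite table and one over JSON Lines text. It then joins their rows with a simple hash join. The `Connector` interface, the class names, and `federated_join` are all invented for illustration. They are not Presto's actual connector SPI, which is written in Java, and in Presto itself the join would be written in SQL.

```python
import json
import sqlite3
from typing import Iterable, Iterator, Protocol


class Connector(Protocol):
    """Hypothetical connector interface: scan the rows of one table in one data store."""

    def scan(self, table: str) -> Iterator[dict]: ...


class SQLiteConnector:
    """Relational source backed by a SQLite connection."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn

    def scan(self, table: str) -> Iterator[dict]:
        # Table names are trusted in this sketch; a real connector would validate them.
        cursor = self.conn.execute(f"SELECT * FROM {table}")
        columns = [col[0] for col in cursor.description]
        for values in cursor:
            yield dict(zip(columns, values))


class JSONLinesConnector:
    """Nonrelational source: each table is a JSON Lines document held in memory."""

    def __init__(self, tables: dict[str, str]) -> None:
        self.tables = tables

    def scan(self, table: str) -> Iterator[dict]:
        for line in self.tables[table].splitlines():
            if line.strip():
                yield json.loads(line)


def hash_join(left: Iterable[dict], right: Iterable[dict], key: str) -> list[dict]:
    # Build a hash table on the right input, then probe it with each left row (equi-join).
    buckets: dict = {}
    for right_row in right:
        buckets.setdefault(right_row[key], []).append(right_row)
    return [
        {**left_row, **right_row}
        for left_row in left
        for right_row in buckets.get(left_row[key], [])
    ]


def federated_join(left_src: Connector, left_table: str,
                   right_src: Connector, right_table: str, key: str) -> list[dict]:
    # The "engine" pulls rows through each connector and joins them in one place.
    return hash_join(left_src.scan(left_table), right_src.scan(right_table), key)


db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE users (user_id INTEGER, name TEXT)")
db.executemany("INSERT INTO users VALUES (?, ?)", [(1, "Ana"), (2, "Kai")])

events = JSONLinesConnector({
    "clicks": '{"user_id": 1, "page": "/home"}\n'
              '{"user_id": 1, "page": "/cart"}\n'
              '{"user_id": 3, "page": "/faq"}\n'
})

rows = federated_join(SQLiteConnector(db), "users", events, "clicks", "user_id")
print(rows)
```

The engine never needs to know how either store keeps its data; it only depends on the `scan` contract. That separation is the point of a connector layer.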

Elasticsearch

Based on the Apache Lucene library, Elasticsearch is an open source search and analytics engine for big data operations. It uses standard RESTful APIs and JSON for searching, indexing, and querying data. It can handle almost any data type, including numerical, textual, structured, semi-structured, and unstructured data. Primary use cases include log monitoring, infrastructure visualization, enterprise search, interactive investigation, automated threat detection, fully loaded deployments, and more.

Key Features

- Elasticsearch Relevance Engine (ESRE) integrates the best of AI and LLMs for hybrid search.

- Its powerful design architecture is implemented with BKD trees, column store, and inverted indices with finite state transducers.

- Efficient cluster management handles millions of events per second.
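Full-text search in Lucene and Elasticsearch rests on the inverted index mentioned above. The standard-library Python sketch below builds a toy inverted index and answers AND queries by intersecting postings lists. It leaves out relevance scoring, finite state transducers, and the compression Lucene actually uses. The lowercase-and-split analyzer is a deliberately simplistic assumption.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    # A very simple analyzer: lowercase, then keep runs of letters and digits.
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs: dict[int, str]) -> dict[str, set[int]]:
    # Map each term to the set of document IDs that contain it (its postings list).
    index: dict[str, set[int]] = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return index


def search_all(index: dict[str, set[int]], query: str) -> set[int]:
    # AND semantics: a document matches only if it appears in every term's postings list.
    postings = [index.get(term, set()) for term in tokenize(query)]
    return set.intersection(*postings) if postings else set()


docs = {
    1: "Disk usage warning on node-7",
    2: "Login failed for user admin",
    3: "Disk failure detected on node-3",
}
index = build_index(docs)
print(search_all(index, "disk node"))
print(search_all(index, "failed login"))
```

A lookup touches only the postings lists of the query terms, not every document. This is why inverted indices stay fast as the number of documents grows.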

Apache Hive

Apache Hive is a top choice for data warehousing, storing and processing large datasets efficiently. This open source framework is closely integrated with Hadoop and is capable of massive-scale data analytics for better-informed decision-making. It offers direct access to files stored in Apache HDFS or Apache HBase and lets you use Apache Tez, Apache Spark, or MapReduce for query execution. Hive can impose structure on data stored in formats such as CSV/TSV, Apache Parquet, and Apache ORC.

Key Features

- HiveServer2 provides enhanced support for multi-client concurrency and authentication.

- The Hive Metastore (HMS) provides a central metadata repository that a whole ecosystem of tools can share, improving synchronization and scalability.

- Provides access to HCatalog for storage management and WebHCat for metadata operations.
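When Hive imposes structure on files, the approach is often called schema-on-read. The files stay exactly as written, and a table definition is applied only when they are queried. The Python sketch below imitates this for CSV text. The schema and column names are hypothetical. Returning `None` (SQL NULL) for missing or unparseable values is an assumption chosen to roughly mirror how Hive treats malformed text fields; this is not Hive's implementation.

```python
import csv
import io

# Hypothetical table definition: (column name, type the raw text is converted to).
SCHEMA = [("order_id", int), ("customer", str), ("amount", float)]


def read_with_schema(raw_text: str, schema=SCHEMA, delimiter: str = ",") -> list[dict]:
    """Apply a schema to delimited text at read time; the raw text itself is never modified."""
    records = []
    for fields in csv.reader(io.StringIO(raw_text), delimiter=delimiter):
        record = {}
        for i, (name, cast) in enumerate(schema):
            if i >= len(fields):
                record[name] = None  # Missing trailing column is read as NULL.
                continue
            try:
                record[name] = cast(fields[i])
            except ValueError:
                record[name] = None  # Unparseable value is read as NULL; the scan continues.
        records.append(record)
    return records


raw = "1001,acme,250.00\n1002,globex,n/a\n"
for row in read_with_schema(raw):
    print(row)
```

Because the schema lives outside the data, the same files can be reread later with a different definition, such as a new delimiter or column types, without rewriting them.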

Splunk

Splunk can competently handle complex digital infrastructures and explore vast amounts of data. It improves digital resilience with comprehensive visibility, rapid detection and investigation, and optimized response. It supports building real-time data applications without large-scale development and programming frameworks. It also includes a suite of tools and technologies for machine-level intelligence integration, real-time stream processing, federated search, unified security and observability, and more.

Key Features

- Integrates with more than 1,000 sources for improved accessibility.

- Automated investigation and response capabilities strengthen security operations.

- Offers full-stack visibility along with a 30 percent reduction in load time.

KNIME

The Konstanz Information Miner, or KNIME, is a complete platform for data science that helps create analytic models, deploy workflows, monitor insights, and collaborate across various disciplines. The low-code/no-code interface facilitates sophisticated analyses, workflow automation, interactive data visualizations, and other data operations. It is easy to use without advanced coding skills. KNIME provides users with a fully functional analytics environment that simplifies business data models.

Key Features

- Visual, interactive environment makes data preparation faster and analysis deeper.

- Intuitive UI includes thousands of self-explanatory nodes for creating workflows.

- Open source approach includes integrations with more than 300 data sources and popular ML libraries.

Tableau

Tableau is a well-known name in data intelligence and visualization and one of the fastest-growing big data technologies, with a focus on AI-powered data innovation. Its advanced features include multi-row calculations, line patterns, personal access token admin control, and more.

Key Features

- Built-in visual best practices allow for seemingly limitless data exploration.

- Analytics platform includes fully integrated AI/ML capabilities for visual storytelling and collaboration.

- Intuitive drag-and-drop interface makes insights and decision-making easier.

Plotly

Plotly is a premier platform for big data visualization, with cutting-edge analytics, high-quality graphs, sophisticated data pipelines, and a friendly Python interface that integrates easily with existing IT infrastructure. Plotly also helps develop production-grade data apps to run modern businesses efficiently. Its comprehensive design kit eliminates the need to write CSS or HTML code. Ready-made templates and simplified layouts also make it easy to arrange, style, and customize applications.

Key Features

- Python-based point-and-click interface is easy to use.

- Purpose-built platform makes deployments and developments reliable and scalable.

- Includes comprehensive support with guided installations and enablement sessions.

Cassandra

Apache Cassandra helps manage massive amounts of data with its masterless hybrid architecture and extensive data processing capabilities. Its distributed NoSQL database offers a flexible approach to schema definition and can handle disparate data types. Cassandra can be run on multiple machines as a unified whole and facilitates peer-to-peer node communication. Its self-healing feature adds to its resiliency and performance.

Key Features

- Rapidly scalable cloud databases support multiple data centers.

- Robust and resilient structure scales linearly as nodes are added.

- Replication factor enables multiple replicas for the same data, ensuring reliability and fault tolerance.
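The sketch below illustrates the replication factor in the style of Cassandra's SimpleStrategy. The partition key is hashed onto a token ring, and the first node clockwise from that token holds the first replica. The next nodes around the ring hold the remaining replicas. This is a minimal Python illustration under stated assumptions: one token per node (no virtual nodes), a single data center, and MD5 as the hash. Cassandra's default partitioner uses Murmur3. The `Ring` class is hypothetical and not part of any Cassandra driver.

```python
import bisect
import hashlib


def token(key: str) -> int:
    # Map a key onto the ring; MD5 is an assumption chosen for simplicity.
    return int(hashlib.md5(key.encode()).hexdigest(), 16)


class Ring:
    """Hypothetical single-data-center ring with one token per node."""

    def __init__(self, nodes: list[str], replication_factor: int) -> None:
        if not 1 <= replication_factor <= len(nodes):
            raise ValueError("replication factor must be between 1 and the node count")
        self.rf = replication_factor
        # Each node owns one position on the ring; sort nodes by their tokens.
        self.ring = sorted((token(n), n) for n in nodes)
        self.tokens = [t for t, _ in self.ring]

    def replicas(self, partition_key: str) -> list[str]:
        # First replica: the first node whose token is >= the key's token (wrapping around).
        # Remaining replicas: the next nodes clockwise around the ring.
        start = bisect.bisect_left(self.tokens, token(partition_key)) % len(self.ring)
        return [self.ring[(start + i) % len(self.ring)][1] for i in range(self.rf)]


ring = Ring(["node-a", "node-b", "node-c", "node-d"], replication_factor=3)
owners = ring.replicas("customer:42")
print(owners)
```

With a replication factor of 3 on four nodes, any single node can fail and every partition still has two live copies. This is the reliability that the replication factor provides.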

RapidMiner

Widely used for data mining and predictive analysis, RapidMiner is a popular big data technology with an excellent interface that ensures transparency. Its visual workflow designer helps create engaging data models for better insights, and its project-based framework offers complete visual lineage, explainability, and transparency.

Key Features

- Supports the full analytics lifecycle, including ModelOps, AI app building, data visualization and exploration, collaboration, and governance.

- More than 1,500 built-in functions replicate code-like control.

- End-to-end automation and augmentation make data preparation fast and intuitive.

Bottom Line: Big Data Technology Across the Data Lifecycle

With access to the right data, enterprises can uncover hidden patterns and correlations through advanced analytics and machine learning algorithms. But as data volumes increase, they must find ways to integrate big data technologies and build data processing capabilities that can handle such large and complex datasets.

Big data technologies like those in this guide make it possible for businesses to gain a deeper understanding of market trends, customer behavior, and operational efficiencies by working with data at all stages of the lifecycle and uncovering the insights it contains.
